How we optimized our Private Cloud

to provide high-performance web hosting on a PHP / LEMP stack

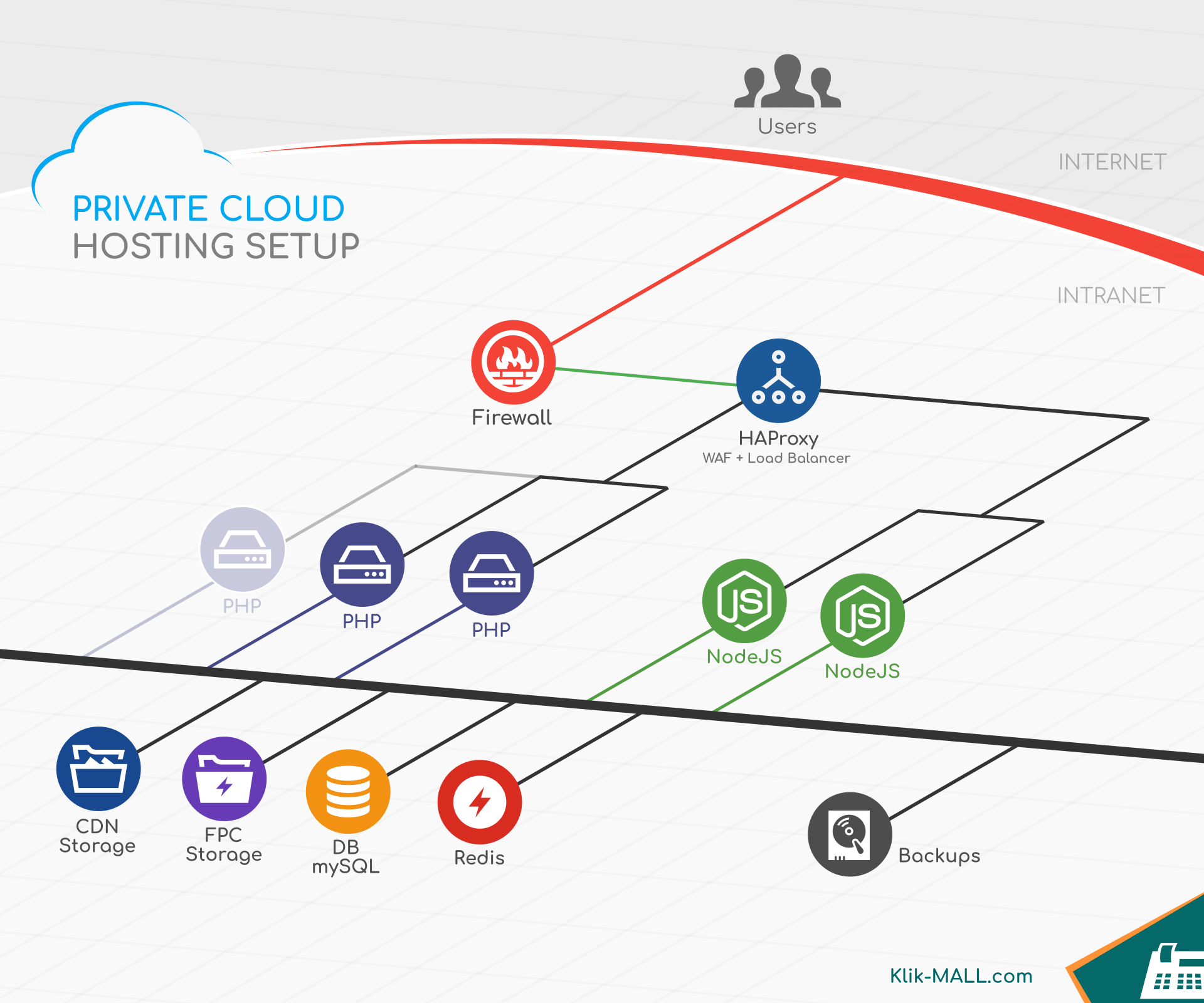

This is an overview of our private web hosting cloud for clients who use the Klik MALL SaaS platform for their websites and webshops.

It can also serve as a blueprint for high-performance WordPress, WooCommerce and Magento setups, as these also run on PHP / LEMP.

Quick summary

To highlight the added value of a setup like this private cloud, here are the main differences compared to common "all-in-one" shared/VPS web hosting:

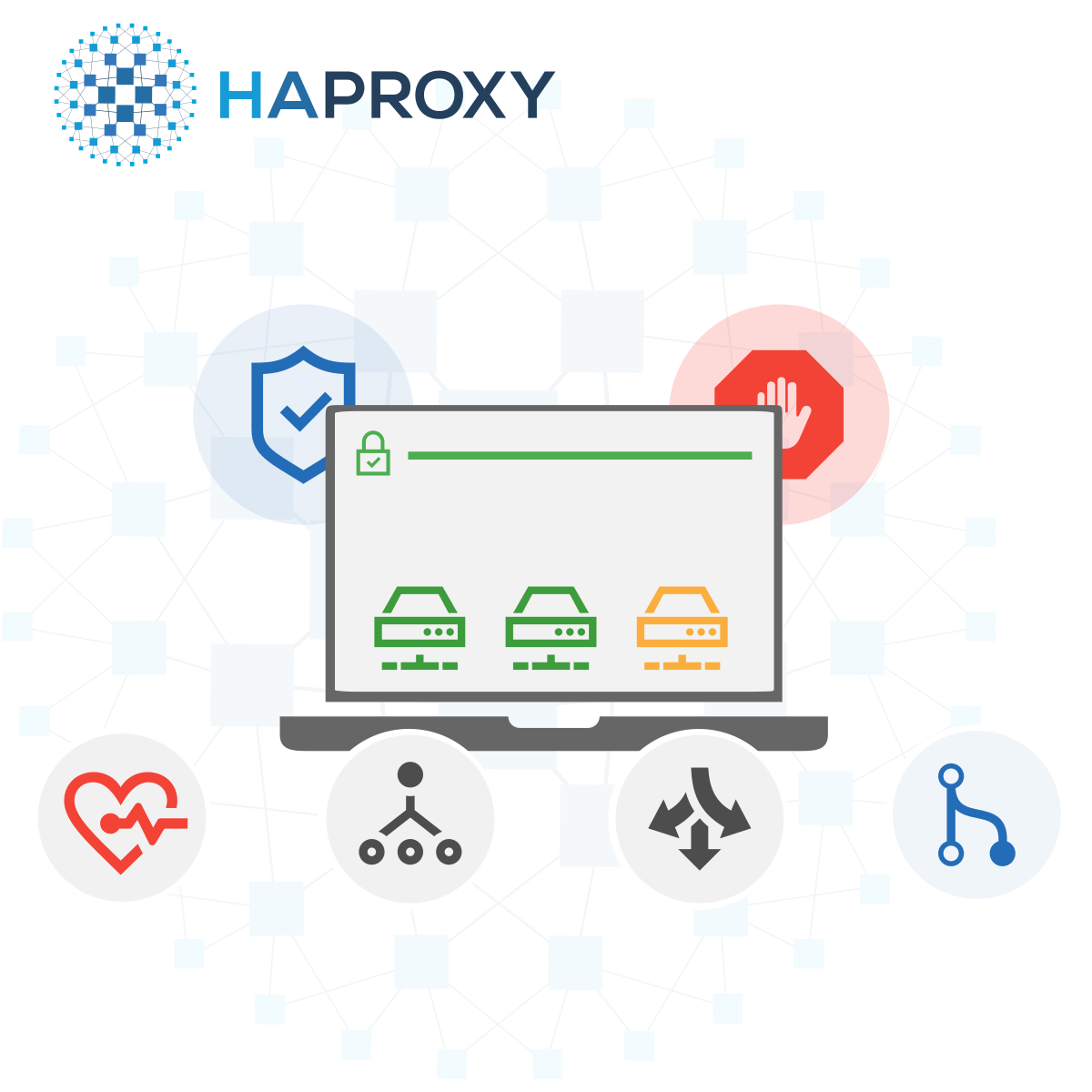

- HAProxy is a special, multi-purpose server. First, it acts as a WAF (Web Application Firewall) that protects resources from "usage abusers", useless bot traffic, etc. Second, it acts as a load balancer that provides horizontal scaling for PHP code execution, NodeJS services, etc. Spreading the load this way also lets us use smaller but faster CPUs that are better suited to the job, which gives users faster page loads.

- Using the fastest CPU for PHP can reduce TTFB (time to first byte) by 30% or more compared to common hosting CPUs. It is important to note that the "best CPU for the job" might not suit a common all-in-one hosting package, but it is a perfect fit in a cluster setup like the one described below.

- Using Intel Optane NVMe for the database means using the single best storage drive for this workload. Having enough RAM and optimized queries are the two best things you can do for database performance; Intel Optane comes next.

- A special filesystem (ZFS) on the NAS/media server provides the best "bang for the buck" CDN for static files.
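To make the "usage abusers" part of the WAF more concrete, here is a minimal Python sketch of a per-client request-rate rule. It is an illustration only, assuming a sliding window over each client's recent requests; in HAProxy itself such a rule is normally written in the configuration (for example with stick tables that track request rates), not in application code. The class name, limit and window are hypothetical.

```python
from __future__ import annotations

import time
from collections import defaultdict, deque


class SlidingWindowRateLimiter:
    """Hypothetical "usage rate" rule: allow at most `limit` requests
    per client within any `window`-second interval."""

    def __init__(self, limit: int, window: float) -> None:
        self.limit = limit
        self.window = window
        # client id (e.g. source IP) -> timestamps of recently allowed requests
        self._hits: dict[str, deque[float]] = defaultdict(deque)

    def allow(self, client: str, now: float | None = None) -> bool:
        """Return True if the request may pass, False if the client is over the limit."""
        now = time.monotonic() if now is None else now
        hits = self._hits[client]
        # Forget requests that have fallen out of the window.
        while hits and now - hits[0] >= self.window:
            hits.popleft()
        if len(hits) >= self.limit:
            return False  # the proxy would deny the request here (e.g. with HTTP 429)
        hits.append(now)
        return True


limiter = SlidingWindowRateLimiter(limit=3, window=10.0)
print([limiter.allow("203.0.113.7", now=t) for t in (0, 1, 2, 3)])
# prints [True, True, True, False]
```

Each client is tracked separately, so one abusive IP hitting the limit does not affect other visitors.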

We divided the core tasks of serving a website/webshop and optimized each of them in terms of software and hardware to provide maximum performance and room to expand for bigger loads.

Continue reading if you want to find out more about how this setup works.

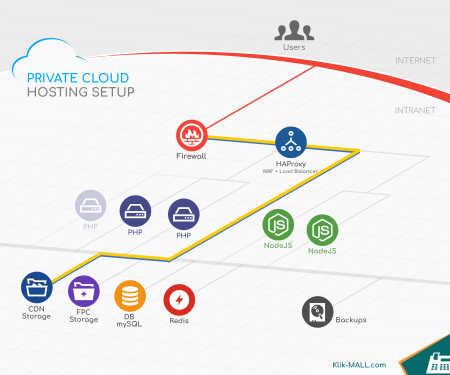

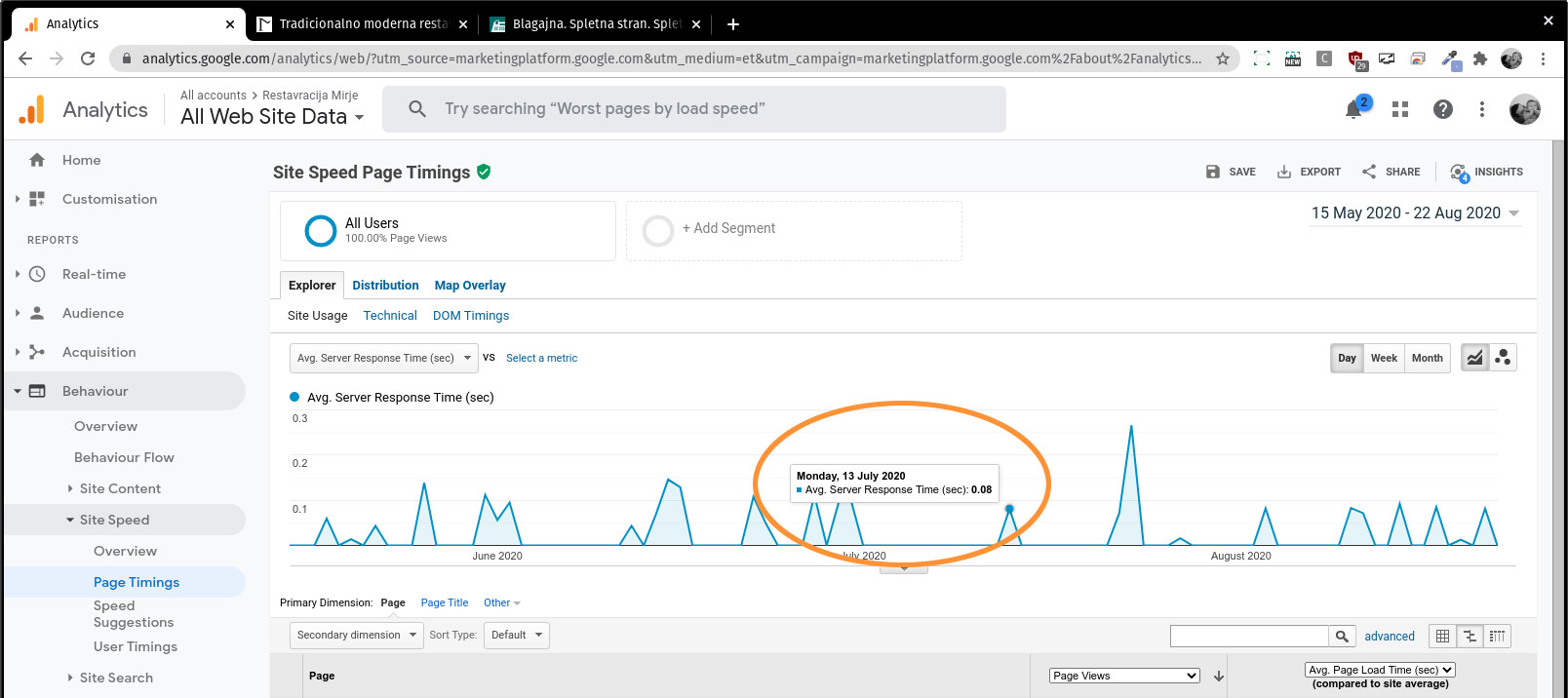

Common path of a web request through the cloud


1. The firewall passes HTTP (80) and HTTPS (443) traffic to HAProxy.

2. HAProxy checks the user's request against the WAF rules. If everything is OK, it selects a backend to process the request.

3. The main app runs on PHP backend servers, which are load-balanced in "Sticky Session" mode.

4. Data is stored on the NAS/CDN server, the FullPageCache storage, the database, Redis and other services.

5. PHP processes the request and returns a response to HAProxy, which compresses and encrypts the response for the user.

6. One exception is static files, which are served directly by the CDN server. Their path is: HAProxy > CDN > HAProxy > User.

7. NodeJS servers run supporting services such as exporting invoices (e.g. HTML2PDF) and are load-balanced in "Round Robin" mode.
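The routing decision HAProxy makes in the steps above can be sketched in Python as a small function. This is a hypothetical illustration, not our actual HAProxy configuration: the whitelist/blacklist handling, the bad-bot markers, the static file extensions and the `/export/` path prefix for NodeJS services are all assumptions chosen for the example.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical rule values; real WAF rules live in the HAProxy configuration.
STATIC_EXTENSIONS = (".css", ".js", ".png", ".jpg", ".jpeg", ".webp", ".svg", ".woff2")
BAD_BOT_MARKERS = ("badbot", "scrapy")


@dataclass
class Request:
    client_ip: str
    path: str
    user_agent: str = ""


@dataclass
class WafRules:
    whitelist: set[str] = field(default_factory=set)
    blacklist: set[str] = field(default_factory=set)

    def check(self, req: Request) -> bool:
        """Return True if the request may pass to a backend."""
        if req.client_ip in self.whitelist:
            return True  # trusted clients skip the remaining checks
        if req.client_ip in self.blacklist:
            return False
        ua = req.user_agent.lower()
        return not any(marker in ua for marker in BAD_BOT_MARKERS)


def select_backend(req: Request, waf: WafRules) -> str:
    """Pick a backend pool name: WAF check first, then static, NodeJS or PHP."""
    if not waf.check(req):
        return "deny"
    path = req.path.split("?", 1)[0].lower()  # ignore the query string
    if path.endswith(STATIC_EXTENSIONS):
        return "cdn"  # static files are served by the CDN/NAS server
    if path.startswith("/export/"):
        return "nodejs"  # hypothetical prefix for supporting services
    return "php"  # everything else goes to the main PHP app


waf = WafRules(whitelist={"10.0.0.1"}, blacklist={"198.51.100.9"})
print(select_backend(Request("203.0.113.7", "/media/logo.png"), waf))  # cdn
print(select_backend(Request("203.0.113.7", "/shop/cart?x=1"), waf))  # php
```

In HAProxy the same split is normally expressed with ACLs and `use_backend` rules; the sketch only shows the order in which the checks are applied.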

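- LB: load balancer

- WAF rules: whitelist, blacklist, good/bad bots, usage rate, etc.

- Sticky Session mode: all requests from the same user session go to the same backend server.

- Round Robin mode: each new request goes to the next backend server in turn.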
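The two load-balancing modes can be compared with a minimal Python sketch. It assumes an in-memory session table and hypothetical server names; HAProxy typically implements sticky sessions with a server cookie or a stick table, and this sketch ignores health checks and server failures.

```python
from __future__ import annotations

import itertools


class RoundRobinBalancer:
    """Hand each new request to the next server in turn (as for the NodeJS pool)."""

    def __init__(self, servers: list[str]) -> None:
        self._cycle = itertools.cycle(servers)

    def pick(self) -> str:
        return next(self._cycle)


class StickySessionBalancer:
    """Keep every request of a session on the same server (as for the PHP pool).

    New sessions are spread round robin; known sessions reuse their server.
    """

    def __init__(self, servers: list[str]) -> None:
        self._rr = RoundRobinBalancer(servers)
        self._assigned: dict[str, str] = {}  # session id -> server

    def pick(self, session_id: str) -> str:
        if session_id not in self._assigned:
            self._assigned[session_id] = self._rr.pick()
        return self._assigned[session_id]


rr = RoundRobinBalancer(["node1", "node2"])
print([rr.pick() for _ in range(3)])  # ['node1', 'node2', 'node1']

sticky = StickySessionBalancer(["php1", "php2"])
print([sticky.pick(s) for s in ("alice", "bob", "alice")])  # ['php1', 'php2', 'php1']
```

One common reason to prefer sticky sessions for PHP is that per-user state, such as sessions or warm local caches, stays on one server, while stateless jobs like HTML2PDF exports can go to any free NodeJS server.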